Integrating fNIRS with VR/XR

There are many ways to synchronize fNIRS with virtual reality (VR). When planning this integration, you first need to decide whether the VR headset will serve only as a head-mounted display (HMD) that presents stimuli to the participant, or whether fNIRS will act as a controller that influences events in the virtual environment. Each variant requires a tailored approach. At Cortivision, we have tested both and developed the appropriate synchronization mechanisms for each.

Depending on your experience, skills and resources, you can use our integration with SilicoLabs, a simple but powerful platform for creating VR scenarios without coding. This solution is intended for teams that do not have programmers able to build VR scenes. The prepared scenarios can be adapted for cognitive research, market research, spatial navigation optimization, impact and perception studies, or economic studies. Research in VR can also be enriched with eye-tracking data. Please contact us for more details about our fNIRS integration with SilicoLabs.

Cortivision Spotlight

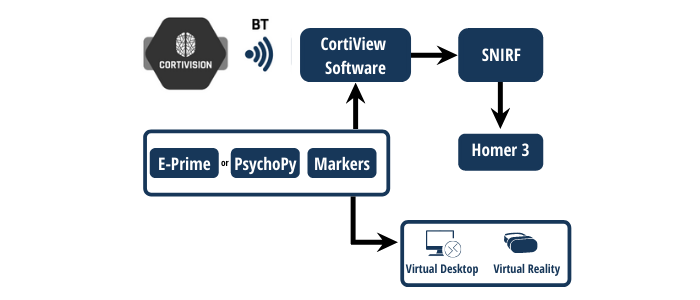

CortiVision SPOTLIGHT is a fully integrated environment for combining NIRS technology with virtual reality (VR) in research and training applications. Our demo system is designed mainly for biofeedback practice, enabling effective and engaging cognitive training based on near-infrared spectroscopy. This solution targets researchers and advanced users who are comfortable working with code to build customized scenarios and experimental procedures. Virtual reality gives precise control over the content presented to the participant, which makes SPOTLIGHT well suited to scientists and professionals in UX/UI and ergonomics. Here are some examples of scenarios you can implement with SPOTLIGHT:

1. Online processing

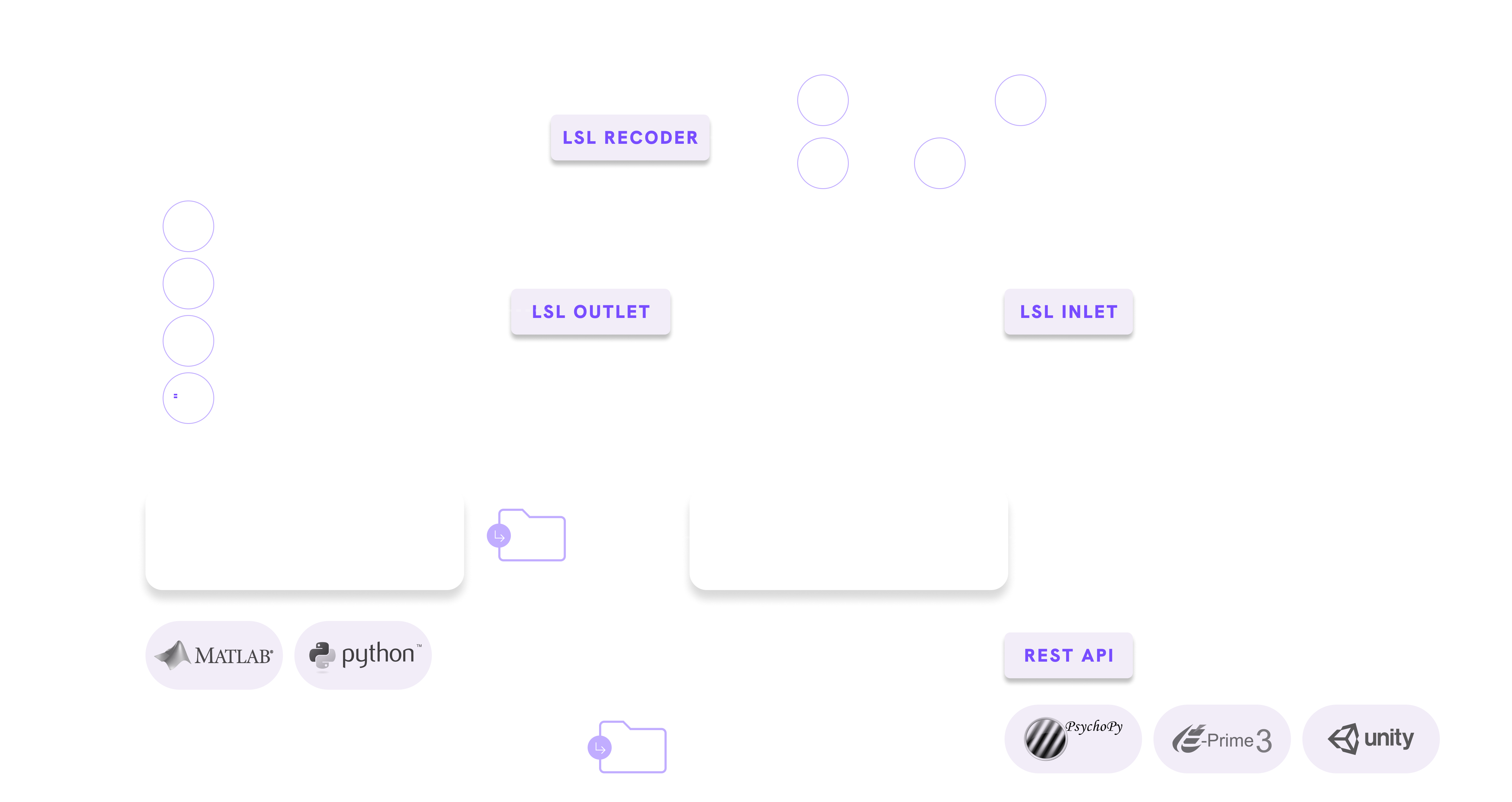

CortiView software can stream data over the Lab Streaming Layer (LSL) protocol to OpenViBE, a popular platform for real-time signal processing. The classification results can then be used to control feedback in a Unity scene.
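To make the online pipeline more concrete, here is a minimal Python sketch of the windowing step that typically sits between the incoming stream and the classifier. It is an illustration under stated assumptions, not CortiView or OpenViBE code: it assumes a single fNIRS channel arriving in chunks (in a live setup these could come from pylsl's `StreamInlet.pull_chunk()`), uses the least-squares slope of each window as the feature, and applies a simple threshold in place of the classification OpenViBE would perform. `SlidingWindow`, `window_slope`, `to_command` and the `"increase"`/`"hold"` commands are hypothetical names.

```python
from collections import deque
from statistics import fmean


def window_slope(values):
    """Least-squares slope of the samples against their index (signal units per sample)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = fmean(values)
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


class SlidingWindow:
    """Buffers incoming samples and emits one feature per completed window.

    Hypothetical helper: in a live setup the chunks would come from an
    LSL inlet instead of plain Python lists.
    """

    def __init__(self, size, step):
        if size < 2 or step < 1:
            raise ValueError("size must be >= 2 and step >= 1")
        self.size = size
        self.step = step
        self.buffer = deque(maxlen=size)  # keeps only the newest `size` samples
        self._new_samples = 0             # samples received since the last emitted window

    def push(self, chunk):
        """Add a chunk of samples and return the features of any windows it completes."""
        features = []
        for sample in chunk:
            self.buffer.append(float(sample))
            self._new_samples += 1
            if len(self.buffer) == self.size and self._new_samples >= self.step:
                features.append(window_slope(list(self.buffer)))
                self._new_samples = 0
        return features


def to_command(feature, threshold=0.5):
    """Hypothetical two-class decision that a Unity scene could react to."""
    return "increase" if feature > threshold else "hold"


if __name__ == "__main__":
    window = SlidingWindow(size=4, step=2)
    print(window.push([0.0, 1.0, 2.0]))    # [] (window not full yet)
    feats = window.push([3.0, 4.0, 4.0])   # two windows complete in this chunk
    print([to_command(f, threshold=0.8) for f in feats])
```

The window length, step and threshold are placeholders; in practice they would be chosen with the slow haemodynamic response in mind and tuned on calibration data.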

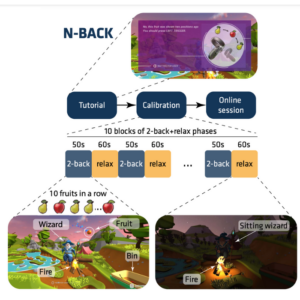

2. Attention training with full concentration

You can build a classic cognitive experiment (such as an n-back task) and use the recorded data to train machine learning classifiers. In the next phase, the trained classifier can control the VR scene in a neurofeedback loop.
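The calibrate-then-feedback workflow can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Cortivision's method: it swaps in a simple nearest-centroid classifier, assumes each n-back block has already been reduced to a small feature vector (for instance, the mean HbO change per channel), and labels blocks as `"high"` or `"low"` load. `NearestCentroid` and `feedback_level` are hypothetical helpers, and the 0 to 1 value they produce stands for whatever parameter the VR scene would modulate.

```python
import math
from statistics import fmean


class NearestCentroid:
    """Minimal nearest-centroid classifier (a stand-in for the ML step)."""

    def fit(self, features, labels):
        if not features or len(features) != len(labels):
            raise ValueError("need one label per feature vector")
        self.centroids = {}
        for label in sorted(set(labels)):
            rows = [f for f, l in zip(features, labels) if l == label]
            # Per-dimension mean of all calibration vectors with this label.
            self.centroids[label] = [fmean(col) for col in zip(*rows)]
        return self

    def predict(self, x):
        """Return the label whose centroid is closest (Euclidean) to x."""
        return min(self.centroids, key=lambda label: math.dist(x, self.centroids[label]))


def feedback_level(model, x, target="high", other="low"):
    """Map a feature vector to a value in (0, 1) that a VR scene could use.

    The margin is positive when x is closer to the `target` centroid than to
    `other`; a logistic function squashes it into (0, 1).
    """
    margin = math.dist(x, model.centroids[other]) - math.dist(x, model.centroids[target])
    return 1.0 / (1.0 + math.exp(-margin))


if __name__ == "__main__":
    # Hypothetical calibration data: one feature vector per n-back block,
    # e.g. mean HbO change in two channels, labelled by task load.
    X = [[1.0, 0.8], [1.2, 1.0], [0.1, 0.0], [-0.1, 0.2]]
    y = ["high", "high", "low", "low"]
    model = NearestCentroid().fit(X, y)

    sample = [0.9, 0.7]                   # a new window during neurofeedback
    print(model.predict(sample))          # high
    print(round(feedback_level(model, sample), 2))
```

A nearest-centroid rule is used here only because it fits in a few lines; a real study would more likely validate a standard classifier (for example LDA) on held-out calibration blocks before closing the loop.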

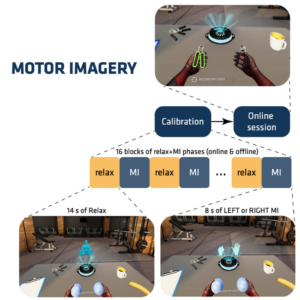

3. Mental training of motor functions

The same protocol can be used to recognize brain activity associated with limb movement, which makes SPOTLIGHT a valuable tool for professionals in neurorehabilitation.

4. Full control of the experimental environment

Using Cortivision Spotlight and virtual desktop applications, you can present experimental stimuli in a distractor-free research environment and run your cognitive procedures with far less influence from uncontrolled variables.

The above integration was developed within the project "BioVR – Diagnostic and training system for attention functions in virtual reality", co-financed in the amount of PLN 934,915.00 (total expenditure: PLN 1,182,228.00). The final version of the system aims to make brain activity testing equipment more accessible for science and diagnostics, to enable high-quality measurement of brain activity outside the laboratory, to support more precise diagnosis of attention functions, and to provide innovative attention training in VR.